Social engineering operates at the crossroads of psychology and technology. It uses psychological manipulation to influence human behaviour and bypass technical defenses, and it is a type of cyberattack with a remarkably high success rate.

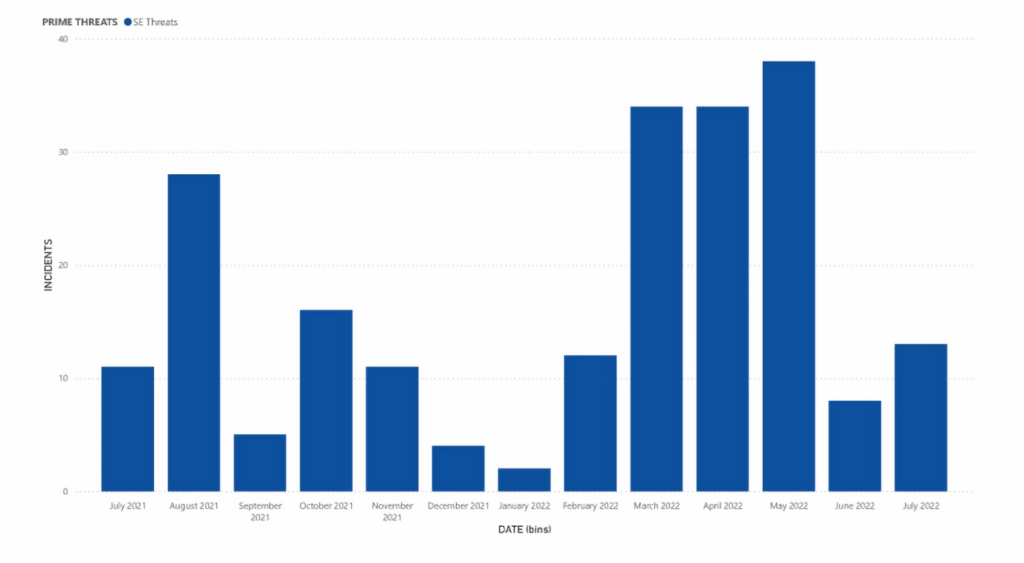

In 2021, ENISA received over 300,000 reports of social engineering attacks targeting European users. This represents a 15% increase from the previous year.

Another survey of 1,000 Americans revealed that 84% of them had been exposed to a socially engineered cyberattack and 36% had fallen prey to one. Among them, 18% had email or financial accounts hacked, and 11% were scammed into investing money in bogus schemes.

The most common targets of social engineering attacks are individuals and small businesses. However, large organizations are also at risk. For example, in 2013, the Target Corporation suffered a major breach that began with a phishing attack on a third-party vendor and exposed the payment card data of about 40 million customers.

For an organization, every employee with access to the organizational network, to collaboratively used tools, or to any communication that may contain confidential data is a potential vulnerability. C-suite executives are also susceptible to social engineering attacks; in fact, some attack types are specifically designed to target figures of authority.

Any countermeasure for social engineering attacks must start with an understanding of the psychological underpinnings that allow malicious actors to manipulate their victims into divulging information or taking unwitting actions.

Social Engineering Psychological Manipulation: Unmasking Mind Games

The human mind, with all its remarkable capabilities, harbors inherent vulnerabilities that social engineers can exploit. These weaknesses stem from a combination of cognitive biases, emotional triggers, and social instincts. Social engineers apply the principles of persuasion to these biases in order to obtain confidential information or breach information security. Dr. Robert Cialdini's research on persuasion is seminal to understanding the relationship between social engineering and human psychology.

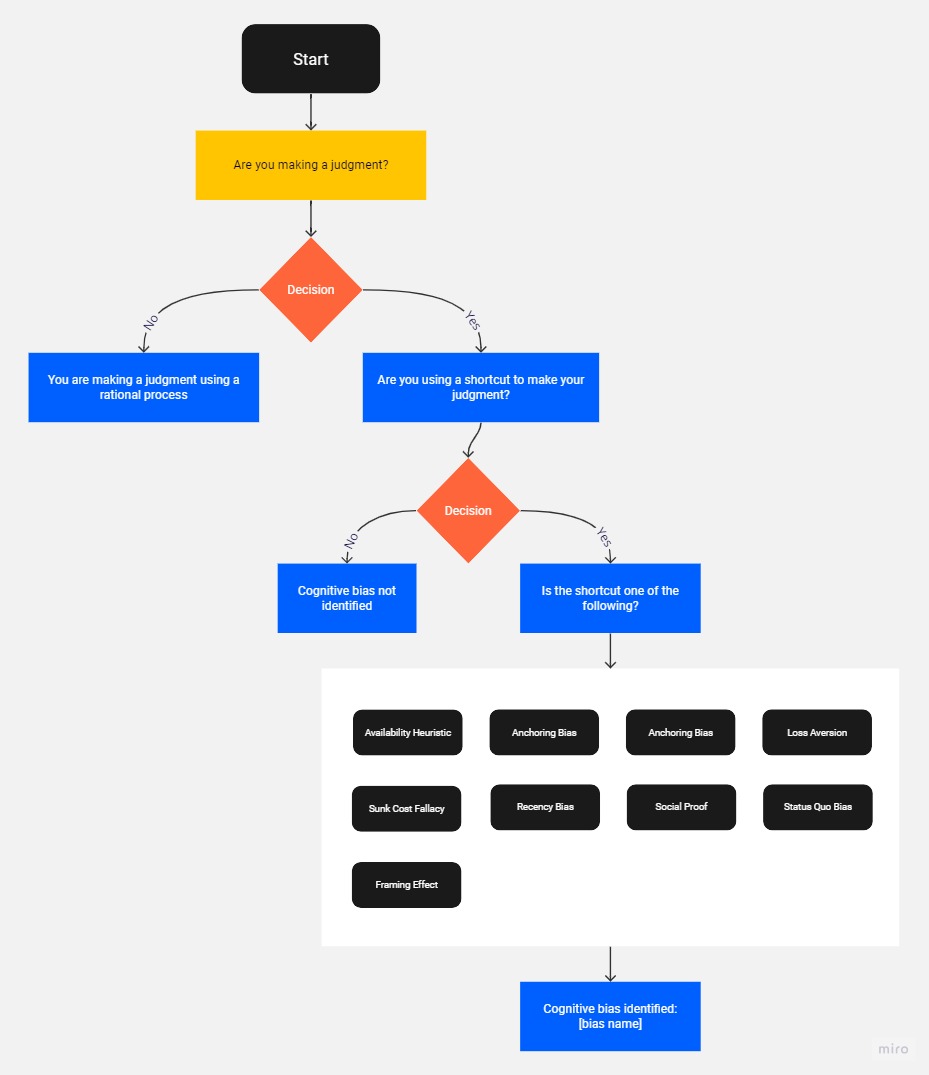

Cognitive biases

The human brain relies on mental shortcuts to process vast amounts of information efficiently, and these shortcuts give rise to cognitive biases. For instance, confirmation bias leads individuals to seek information that confirms their existing beliefs. If hackers are able to gather enough information about an individual or a class of individuals, they can tailor misinformation to feed the victims’ cognitive biases.

Availability Heuristic

People tend to judge the likelihood of an event based on how easily they can recall a similar event from the past. It may result in the overestimation of the frequency of an event.

Anchoring bias

People tend to base their decisions on the first piece of information they receive about something, even after it has been shown to be misleading.

Overconfidence bias

People overestimate their own ability to make accurate judgments and predictions. It often causes them to ignore potential pitfalls.

Loss aversion

The pain of loss is felt more strongly than the pleasure of gains. This tendency deeply influences people’s risk-taking patterns.

Sunk cost fallacy

People tend to keep investing in an idea, decision, or project based on the resources already invested even if the venture isn’t paying off.

Recency bias

People tend to prioritize recent information over historical records or long-term patterns.

Social proof

When faced with uncertainty, people are deeply influenced by the decisions of others, so much so that it can override their independent reasoning.

Status quo bias

People prefer the sub-optimal current situation to a better alternative that involves change.

Framing effect

The way information is framed can alter the decisions made based on it.

Apart from cognitive biases, there are a lot of other psychological traits that can be exploited in a social engineering attack.

Authority and obedience

Humans have an innate respect for authority and they have a tendency to obey authority mostly without questioning it. Social engineers leverage this phenomenon to coerce victims into taking actions that may compromise their data or system.

Attack scenario

- A hacker gathers information about the senior management of an organization.

- The hacker impersonates a senior manager and sends an email to a lower-level employee.

- The email explains that an urgent situation demands access to certain sensitive files.

- Obeying a direct order from an authoritative figure, the employee shares sensitive information.
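One way to make the impersonation step in this scenario harder is to flag messages whose sender name matches a senior manager while the address sits outside the organization's domain. The snippet below is a minimal sketch of that idea, not a complete defense. The domain `example.com`, the names in `EXECUTIVE_NAMES`, and the function `looks_like_executive_spoof` are all hypothetical and chosen only for illustration.

```python
from email.utils import parseaddr

# Hypothetical values for illustration only.
ORG_DOMAIN = "example.com"
EXECUTIVE_NAMES = {"jane doe", "john smith"}


def looks_like_executive_spoof(from_header: str) -> bool:
    """Return True if the display name matches a known executive
    but the address is not on the organization's own domain."""
    display_name, address = parseaddr(from_header)
    # Everything after the last "@" is treated as the sender's domain.
    domain = address.rpartition("@")[2].lower()
    name_matches = display_name.strip().lower() in EXECUTIVE_NAMES
    return name_matches and domain != ORG_DOMAIN


# A familiar name on an unfamiliar domain is flagged for review.
print(looks_like_executive_spoof("Jane Doe <jane.doe@examp1e-mail.com>"))
```

A check like this only inspects the visible From header, so it would not catch a compromised internal account. It is best read as one small signal among many.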

Reciprocity and Trust

People feel obliged to return favors. Attackers offer a small favor to build trust and then ask for something that compromises the victim in return.

Attack scenario

- An individual gets an email that appears to come from a reputable source, informing them about an urgent security update for some software they use.

- It also states that a customer support executive is available to guide them through the process on call.

- The victim makes the call and the attacker builds trust by showing knowledge.

- The attacker asks for user credentials in order to initiate the update.

- The individual provides the credentials out of trust and reciprocity.

- The attacker shares a fake installation link.

- The victim clicks on it and downloads malware that gives the attacker remote access to the victim’s computer.

Fear and emotional triggers

Primal emotions like fear, greed, and curiosity, if triggered effectively, can easily bypass rational decision-making. Social engineering attacks often use these emotional triggers to make people click on links or download files that they would not touch otherwise.

Attack scenario

- A user gets an email claiming that their personal information has been compromised and is at risk of being leaked. The email refers to sites the individual has visited lately to make the claim more convincing.

- The email establishes urgency and explains the consequences of inaction. It also provides a link to take action.

- Driven by heightened fear of financial or reputational loss, the user clicks on the link which triggers the download of malware.

Scarcity principle

People attach more value to resources, opportunities, and items that they believe are rare and available in limited quantity for a limited time. This is one of the key cognitive biases for social engineers.

Attack scenario

- The user gets an email from a reputable online retailer.

- The email offers an exclusive discount on a highly sought-after product (maybe something the user has searched for on the Internet) owing to their “special-customer” status.

- The offer is for a limited period of time and needs immediate action. There is a link.

- The user clicks on the link and a new page opens that looks almost identical to the legitimate retailer’s site.

- The user inserts personal and financial information to claim the discount.

- The email was from an attacker, the site was a clone, and the user just became a victim of phishing.
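Because the cloned page in this scenario looks right, the hostname in the link is often the only reliable clue. The following is a rough sketch, assuming a small allowlist of domains the user actually deals with, of how a link could be compared against that list. `TRUSTED_DOMAINS`, `classify_link`, and the 0.8 similarity threshold are illustrative assumptions, not part of any particular product.

```python
from difflib import SequenceMatcher
from urllib.parse import urlsplit

# Hypothetical allowlist of domains the user actually does business with.
TRUSTED_DOMAINS = {"example-shop.com"}


def classify_link(url: str, threshold: float = 0.8) -> str:
    """Label a link as 'trusted', 'lookalike', or 'unknown'
    based on its hostname alone."""
    host = (urlsplit(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host in TRUSTED_DOMAINS:
        return "trusted"
    # A near match to a trusted domain is more suspicious than a stranger.
    for trusted in TRUSTED_DOMAINS:
        if SequenceMatcher(None, host, trusted).ratio() >= threshold:
            return "lookalike"
    return "unknown"


print(classify_link("https://www.example-shop.com/deal"))
print(classify_link("https://examp1e-shop.com/deal"))
```

String similarity is a blunt heuristic. It will miss some tricks, such as a trusted name used as a subdomain of an unrelated site, so anything not labelled "trusted" still deserves caution.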

The role of technology in hacking human psychology

Technological advancement has equally benefitted hackers and cybersecurity experts. Technical security measures have come a long way to counter improved methodologies of cyberattacks. The human aspect of information security, however, is still the weakest link. And the advancement of technology has brought more challenges for us.

More sophisticated phishing attack sites

Even half a decade ago, a good look at a phishing site would betray its illegitimacy. The theme of the site would be off, the domain name would be obviously fake, and there would even be spelling and stylistic errors in the language.

Not anymore. Few websites are developed from scratch today. Companies use templates and pre-designed themes, and these are easily transferable. Hence, hackers do not find it very hard to create a convincing phishing website complete with the right colors, matching fonts, and overall layout.

Now, with generative AI in the equation, phishing emails are far more accurate. In fact, it is possible for hackers to imitate the speech patterns used by individuals.

Consider this: a hacker can easily pick up samples of an individual’s writing or speech from social media platforms. They can then feed the collected samples to a generative model such as GPT to create content that incorporates those elements.

Even trained personnel find it hard to tell a legitimate email from a phishing email. That is also why having a holistic picture of social engineering and its premises is so important.

Improved Spoof with AI voice

We have already discussed the role of authority and trust in persuading people to take action or divulge sensitive information. However, if a senior manager asked an employee to share confidential files over email, a relatively calm individual might just call that manager before obeying the order.

But what if the instruction itself was given through a voice call? A combination of AI-generated voice and caller-ID spoofing can easily make this happen, causing even security-aware individuals to fall victim to social engineering.

Better user profiling

Data analysis is cheaper and more accessible today than ever. Hackers can scrape personal information from various publicly available sources and filter the data based on specific traits, which lets them launch better-targeted spear phishing attacks.

It is safe to say that the advancement of technology, especially in the fields of data analytics and artificial intelligence, has increased the vulnerability to social engineering in many cases. It is easier now to identify an individual’s psychological triggers. The process of pretexting is more efficient, and attackers can imitate human interaction far more convincingly in terms of accuracy and tone.

Decreasing the reliance on humans for cybersecurity

It is unfair to expect that any amount of awareness will let human beings muster a flawless defense against social engineering. And even if someone does become capable of resisting all kinds of social engineering attempts, it is unwise for businesses to rely on that.

The solution: bypassing the human element

Employees cannot divulge sensitive information if they do not have it. If an organization uses the principle of least privilege to control access to resources using a strong access management system and stores all credentials in an encrypted vault, a large part of the problem is solved.

Consider this: the administrator assigns an employee role-based access to a specific application by registering the employee's email address with the access management tool. Now, when the employee tries to log into the account, the access manager takes control.

It provides the credentials and fetches the 2FA data, so the employee never handles either. This not only enhances security but also improves efficiency, as workers no longer have to worry about passwords.
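The flow described here can be sketched as a toy model in which credentials are stored once and logins are performed on the employee's behalf, so the secret never reaches the person. This is only an illustration under simplifying assumptions: the `AccessManager` class and its methods are hypothetical, the vault is a plain in-memory dictionary rather than an encrypted store, and 2FA handling is left out.

```python
class AccessManager:
    """Toy model: secrets stay in the vault and are never returned to users."""

    def __init__(self):
        self._vault = {}   # application name -> secret (unencrypted here)
        self._grants = {}  # employee email -> set of permitted applications

    def store_credential(self, app, secret):
        self._vault[app] = secret

    def grant(self, email, app):
        # Least privilege: access is granted per application, per employee.
        self._grants.setdefault(email, set()).add(app)

    def login(self, email, app):
        if app not in self._grants.get(email, set()):
            raise PermissionError(f"{email} has no access to {app}")
        secret = self._vault[app]  # raises KeyError if nothing is stored
        self._authenticate(app, secret)
        # Only an opaque session marker goes back to the caller.
        return f"session:{app}:{email}"

    def _authenticate(self, app, secret):
        """Placeholder: a real system would submit the secret to the app."""


manager = AccessManager()
manager.store_credential("crm", "s3cret")
manager.grant("alice@example.com", "crm")
print(manager.login("alice@example.com", "crm"))
```

The design choice worth noting is that `login` has no code path that hands the secret to the caller. An employee who is tricked into "sharing the password" has nothing to share.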
