On March 13, 2024, the European Union Artificial Intelligence Act was approved by the European Parliament, in a landmark move that marks the world’s first comprehensive set of AI regulations.

The legal framework of the Act is geared towards promoting innovation while establishing minimum standards of safety for AI within the EU going forward.

Already, AI is revolutionizing fields like healthcare, transportation, scientific research, and beyond through its ability to process vast amounts of data, identify patterns, and derive insights at a speed and scale unfathomable to the human mind alone.

On the other hand, the existential risks and ethical quandaries posed by advanced AI systems are just as massive as the potential upsides.

With regulation now shifting from aspiration to concrete enforcement, the Act will have far-reaching ramifications not just within Europe. It will quite likely also serve as a template for how other international organizations and national governments approach AI governance in the years ahead.

In this article, we will look into the details of the new AI Act, exploring its provisions, implications, and what it means for the future of AI development as well as safety and cybersecurity.

Brief History of EU Regulations on AI/Technology

Although it seems the entire world woke up to what AI is capable of at the end of 2022, when ChatGPT was launched, many governments and institutions had been wary of its potential long before then and had already begun taking steps to regulate AI innovation.

So, while the AI Act might be seen as a new development, it has a long history that predates even the timeline outlined below. The EU has been considering AI regulation for quite some time, along with defining acceptable uses of artificial intelligence, especially for commercial and public purposes.

Still, the publication of the European Commission’s White Paper on Artificial Intelligence can be considered the first immediate step toward a set of regulations governing AI deployment. What follows is a summarised timeline of events leading up to the passage of the law:

- February 2020: The European Commission published the White Paper on Artificial Intelligence, intending to define AI and highlight its benefits and risks

- October 2020: EU leaders discussed AI and the European Council invited the Commission to, among other requirements, “provide a clear, objective definition of high-risk artificial intelligence systems.”

- April 2021: The European Commission published a proposal for an EU regulation on AI, officially the first document serving as a precursor to the AI Act

- December 2022: The Council of the EU adopted its position on the AI Act, allowing negotiations to begin with the European Parliament

- December 2023: After three days of talks, the EU Council and the Parliament reached a provisional agreement, capping months of negotiations

- March 2024: The AI Act was officially passed by the European Parliament with an overwhelming majority

Provisions of the New EU AI Act

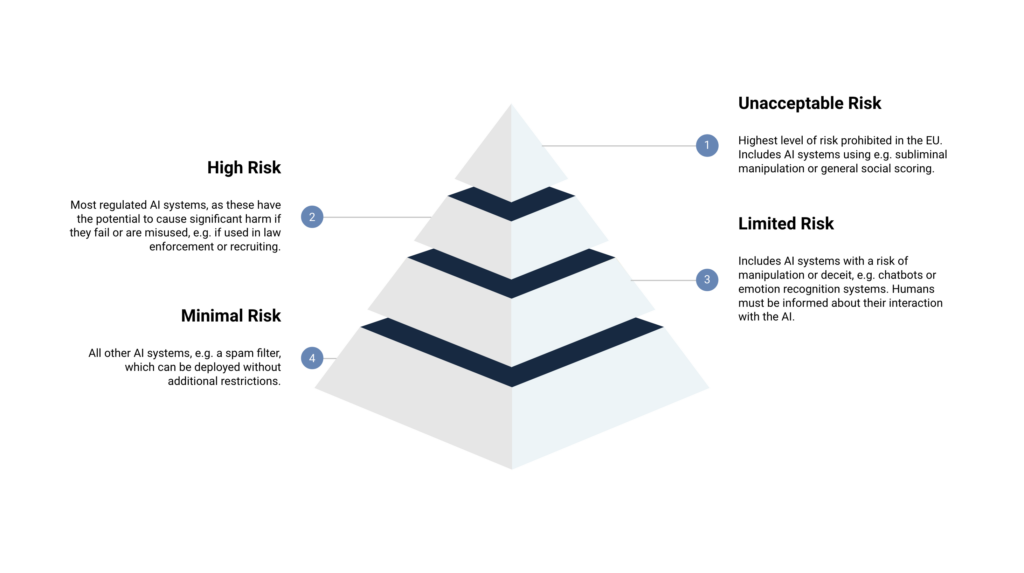

The AI Act takes a risk-based approach: it categorizes AI applications by their potential risks and level of impact, and it establishes obligations for human oversight of AI-powered technology and tools, including the creation of an AI Office. First, it defines certain uses of AI as unacceptable, and such practices will be fully prohibited once the Act takes effect.

A second class of AI systems is defined as high-risk. Deploying such systems is permitted, but it is tied to stringent requirements and continuous monitoring protocols. The following subsections highlight the unacceptable uses and the other use cases.

Unacceptable AI Uses

In general, the following AI practices are prohibited:

- The deployment of AI with manipulative or deceptive intentions, especially when it is likely to cause harm

- AI products that take advantage of people’s vulnerability due to age, disability, socio-economic condition, or other reasons

- Implementing discriminatory social scoring systems based on AI surveillance (this is probably related to China’s infamous Social Credit system)

- AI that assesses people’s likelihood of committing crimes based solely on profiling or personality traits

- Untargeted scraping of facial images from the internet or CCTV footage to build or expand facial recognition databases

- The use of biometric categorization systems to infer people’s race, political position, beliefs, sexual orientation, and the like

- Real-time remote biometric identification in publicly accessible spaces, except in narrowly defined law enforcement cases

Acceptable But High-Risk AI Uses

Certain AI products are permitted to operate but are categorized as high-risk, meaning their creators must maintain a risk management system throughout the product’s lifecycle to foresee, evaluate, and address potential risks.

These AI products are defined as those that pose a significant risk of harm to people’s health, safety, or fundamental rights, or seek to manipulate their decision-making capacity. They include products that fall under the EU’s product safety legislation, such as toys, cars, and medical devices. AI products deployed in specific fields, including law enforcement, employment services, education, and migration, also fall into this category.

Transparency Obligations

The Act also sets obligations for general-purpose AI models, especially around transparency, which has long been an issue with AI. These concerns have become more prominent as AI has advanced, particularly regarding how the opacity of AI models affects trust and accountability and can lead to bias and unethical decision-making.

In terms of transparency requirements, the following are required of providers of AI systems according to the EU AI Act:

- Users of an AI-powered product must be informed that they are interacting with an AI system

- Content, including text, images, and video, produced via generative AI must be properly marked as AI-generated

- Where AI is deployed for emotion recognition or biometric categorization, the affected persons must be informed

- Creators of deepfake content, including image, audio, or video content, must disclose that the content has been artificially generated or manipulated

Cybersecurity and the EU AI Act

Even with the AI Act in force, organizations are not absolved of their cybersecurity obligations under other existing EU laws, including the General Data Protection Regulation, the Cybersecurity Act, the Cyber Resilience Act, the Data Governance Act, the Cyber Solidarity Act, and more.

Organizations are still expected to adhere to the highest standards of cybersecurity and resilience in their practices. This pertains particularly to providers who operate systems designated as high-risk according to the AI Act.

The Act explicitly acknowledges that cybersecurity “plays a crucial role in ensuring that AI systems are resilient against attempts to alter their use, behavior, and performance”, especially by malicious third parties. Thus, operators of AI systems should ensure a level of cybersecurity appropriate to the risks the tools pose.

For instance, although AI is not specifically mentioned in the GDPR, many of its provisions apply to AI systems and their creators or operators. Where the two seem to diverge, the provisions can be interpreted to fit the AI context. The GDPR’s principle of data minimization, for example, could be understood to mean making data less personally identifiable, since large datasets are a core part of what makes AI systems work.

The AI Act also commits member states to working with startups to launch safe AI innovations by providing an enabling environment. Given the fast pace of innovation in the space, AI startups cannot afford to ignore the important role that cybersecurity plays, and they must strive to achieve the highest standards of security.

Major cybersecurity concerns for AI startups and organizations include data governance and access management, especially to prevent data poisoning or other forms of data corruption by malicious actors. For this, organizations need a robust access management tool such as Uniqkey that helps them control employee access, including during vulnerable stages such as onboarding and offboarding.

Penalties and Rollout of AI Act Requirements

While the European Parliament officially approved the Act in March 2024, most of its provisions will not be fully applicable until 24 months after entry into force, and some provisions will apply earlier or later than that. Here’s the summary timeline for the rollout, counted from entry into force:

- 6 months: AI systems posing unacceptable risks will be banned

- 9 months: finalization of codes of practice for general-purpose AI (GPAI) systems

- 12 months: obligations for providers of GPAI systems will start to be applied

- 18 months: creating a monitoring template for high-risk AI providers

- 24 months: most obligations will come into force and member states should have implemented rules on penalties

- 36 months: obligations on high-risk AI systems shall be applied

- End of 2030: AI systems that are components of large-scale IT systems shall be expected to fully comply with the requirements of the Act
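
To see how the month offsets above translate into calendar dates, here is a minimal Python sketch. The helper names (`add_months`, `rollout_schedule`) are made up for illustration, and the entry-into-force date in the example is an input assumption rather than something stated in this article. The Act fixes its own application dates, so treat the output as an approximation.

```python
import calendar
from datetime import date

# Month offsets and descriptions taken from the rollout list above.
MILESTONES = {
    6: "ban on AI systems posing unacceptable risks",
    9: "codes of practice for GPAI finalized",
    12: "obligations for GPAI providers start to apply",
    18: "monitoring template for high-risk AI providers",
    24: "most obligations in force; penalty rules implemented",
    36: "obligations on high-risk AI systems apply",
}

def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`,
    clamping the day to the last day of the target month."""
    total = start.month - 1 + months
    year = start.year + total // 12
    month = total % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)

def rollout_schedule(entry_into_force: date) -> list[tuple[date, str]]:
    """Map each milestone offset to an approximate calendar date."""
    return [
        (add_months(entry_into_force, offset), label)
        for offset, label in sorted(MILESTONES.items())
    ]

# Example input only: replace with the actual entry-into-force date.
for when, label in rollout_schedule(date(2024, 8, 1)):
    print(when.isoformat(), "-", label)
```

Keeping the offsets in one dictionary makes it easy to adjust the schedule if the official dates differ from simple month arithmetic.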

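Penalties for noncompliance can reach €35 million or 7% of the company’s worldwide annual turnover, whichever is higher. This top tier applies to the most serious violations, such as prohibited AI practices. Fines also apply to SMEs, including startups, although for them the cap is the lower of the two amounts.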
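
The "whichever is higher" rule is simple arithmetic, and a short Python sketch makes it concrete. The function name `max_fine_eur` is hypothetical, and the sketch models only the headline cap quoted above. It ignores lower penalty tiers and any special treatment of smaller companies, so it is an illustration, not legal guidance.

```python
FIXED_CAP_EUR = 35_000_000  # fixed amount quoted in the text
TURNOVER_PERCENT = 7        # share of worldwide annual turnover quoted in the text

def max_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Return the headline upper bound of a fine in whole euros:
    the higher of the fixed cap and 7% of worldwide annual turnover."""
    if worldwide_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_based = worldwide_annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)

# 7% of EUR 100 million is EUR 7 million, so the fixed cap is the higher value.
print(max_fine_eur(100_000_000))
# 7% of EUR 2 billion is EUR 140 million, which exceeds the fixed cap.
print(max_fine_eur(2_000_000_000))
```

Under these figures the two amounts meet at a turnover of €500 million. Below that the fixed €35 million is the higher value, and above it the turnover-based amount is.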

People will have the right to file complaints about AI systems to designated national authorities.

The Role Organizations Need to Play

Artificial intelligence is a double-edged sword, as everyone can now see. To ensure that things don’t get out of hand in the quest to drive innovation at a rapid pace, organizations in the AI industry must prepare to adhere to relevant EU regulations and maintain high standards of safety and security.